Memgraph

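Memgraph is an open-source graph database, tuned for dynamic analytics environments and compatible with Neo4j. To query the database, Memgraph uses Cypher, the most widely adopted, fully specified, and open query language for property graph databases.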

This notebook will show you how to query Memgraph with natural language and how to construct a knowledge graph from your unstructured data.

But first, make sure to complete the whole setup.

Setting up

To follow along with this guide, you will need Docker and Python 3.x installed.

To quickly run Memgraph Platform (Memgraph database + MAGE library + Memgraph Lab) for the first time, do the following:

On Linux/MacOS:

curl https://install.memgraph.com | sh

On Windows:

iwr https://windows.memgraph.com | iex

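Both commands run a script that downloads a Docker Compose file to your system, then builds and starts the memgraph-mage and memgraph-lab Docker services in two separate containers. Now you have Memgraph up and running! Read more about the installation process in the Memgraph documentation.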

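To use LangChain, install and import all the necessary packages. We'll use the package manager pip, along with the --user flag, to ensure proper permissions. If you have Python 3.4 or a later version installed, pip is included by default. You can install all the required packages using the following command: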

pip install langchain langchain-community langchain-experimental langchain-openai neo4j --user

You can either run the provided code blocks in this notebook or use a separate Python file to experiment with Memgraph and LangChain.

Natural language querying

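Memgraph's integration with LangChain includes natural language querying. To use it, first do all the necessary imports. We will discuss them as they appear in the code.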

First, instantiate MemgraphGraph. This object holds the connection to the running Memgraph instance. Make sure to set up all the environment variables properly.

import os

from langchain_community.chains.graph_qa.memgraph import MemgraphQAChain
from langchain_community.graphs import MemgraphGraph
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

url = os.environ.get("MEMGRAPH_URI", "bolt://127.0.0.1:7687")
username = os.environ.get("MEMGRAPH_USERNAME", "")
password = os.environ.get("MEMGRAPH_PASSWORD", "")

graph = MemgraphGraph(
    url=url, username=username, password=password, refresh_schema=False
)

refresh_schema is initially set to False because there is still no data in the database and we want to avoid unnecessary database calls.

Populating the database

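To populate the database, first make sure it's empty. The most efficient way to do that is to switch to the in-memory analytical storage mode, drop the graph, and switch back to the in-memory transactional mode. Learn more about Memgraph's storage modes.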
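The same three statements are repeated every time this guide empties the database, so you may want to wrap them in a helper. The sketch below is a minimal example under stated assumptions: reset_database is a hypothetical name, and it accepts any callable that runs one Cypher statement (for example graph.query). The try/finally is a design choice of this sketch, so that a failed DROP GRAPH does not leave the instance in analytical mode.

```python
from typing import Any, Callable, List

# The three reset statements used throughout this guide.
ANALYTICAL = "STORAGE MODE IN_MEMORY_ANALYTICAL"
DROP = "DROP GRAPH"
TRANSACTIONAL = "STORAGE MODE IN_MEMORY_TRANSACTIONAL"


def reset_database(run_query: Callable[[str], Any]) -> None:
    """Empty the database; run_query executes one Cypher statement."""
    run_query(ANALYTICAL)
    try:
        run_query(DROP)
    finally:
        # Switch back even if DROP GRAPH fails, so later queries run in
        # the transactional mode the rest of the guide expects.
        run_query(TRANSACTIONAL)


# Offline stand-in: record the statements instead of sending them to Memgraph.
executed: List[str] = []
reset_database(executed.append)
print(executed)
```

With a live connection you would call reset_database(graph.query).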

The data we'll add to the database is about video games of different genres, available on various platforms and related to publishers.

# Drop graph
graph.query("STORAGE MODE IN_MEMORY_ANALYTICAL")
graph.query("DROP GRAPH")
graph.query("STORAGE MODE IN_MEMORY_TRANSACTIONAL")

# Creating and executing the seeding query
query = """
    MERGE (g:Game {name: "Baldur's Gate 3"})
    WITH g, ["PlayStation 5", "Mac OS", "Windows", "Xbox Series X/S"] AS platforms,
            ["Adventure", "Role-Playing Game", "Strategy"] AS genres
    FOREACH (platform IN platforms |
        MERGE (p:Platform {name: platform})
        MERGE (g)-[:AVAILABLE_ON]->(p)
    )
    FOREACH (genre IN genres |
        MERGE (gn:Genre {name: genre})
        MERGE (g)-[:HAS_GENRE]->(gn)
    )
    MERGE (p:Publisher {name: "Larian Studios"})
    MERGE (g)-[:PUBLISHED_BY]->(p);
"""

graph.query(query)

[]

Notice how the graph object holds the query method. That method executes a query in Memgraph, and MemgraphQAChain also uses it to query the database.
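Because the seeding query embeds its data as string literals, adding another game means editing the Cypher text and taking care with quotes such as the apostrophe in "Baldur's Gate 3". Below is a sketch of a parameterized variant; seed_game and SEED_GAME_QUERY are hypothetical names, and the query mirrors the one above but reads its values from $-parameters.

```python
from typing import Any, Dict, List, Tuple

# Same structure as the seeding query above, with the data moved into parameters.
SEED_GAME_QUERY = """
MERGE (g:Game {name: $name})
FOREACH (platform IN $platforms |
    MERGE (p:Platform {name: platform})
    MERGE (g)-[:AVAILABLE_ON]->(p)
)
FOREACH (genre IN $genres |
    MERGE (gn:Genre {name: genre})
    MERGE (g)-[:HAS_GENRE]->(gn)
)
MERGE (pub:Publisher {name: $publisher})
MERGE (g)-[:PUBLISHED_BY]->(pub)
"""


def seed_game(
    name: str, platforms: List[str], genres: List[str], publisher: str
) -> Tuple[str, Dict[str, Any]]:
    """Return the query text and its parameter map for one game."""
    params = {
        "name": name,
        "platforms": list(platforms),
        "genres": list(genres),
        "publisher": publisher,
    }
    return SEED_GAME_QUERY, params


query, params = seed_game(
    "Baldur's Gate 3",
    ["PlayStation 5", "Mac OS", "Windows", "Xbox Series X/S"],
    ["Adventure", "Role-Playing Game", "Strategy"],
    "Larian Studios",
)
print(params)
```

To run it, you would pass both parts to the database, e.g. graph.query(query, params). This assumes graph.query accepts a parameter dictionary as its second argument, as LangChain's Neo4jGraph.query does; check the MemgraphGraph reference for your installed version. The same query text can then be reused for any number of games.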

Refresh graph schema

Since new data has been created in Memgraph, the schema needs to be refreshed. MemgraphQAChain passes the generated schema to the LLM so that it can generate better Cypher queries.

graph.refresh_schema()

To familiarize yourself with the data and verify the updated graph schema, you can print it using the following statement:

print(graph.get_schema)

Node labels and properties (name and type) are:
- labels: (:Platform)
  properties:
    - name: string
- labels: (:Genre)
  properties:
    - name: string
- labels: (:Game)
  properties:
    - name: string
- labels: (:Publisher)
  properties:
    - name: string

Nodes are connected with the following relationships:
(:Game)-[:HAS_GENRE]->(:Genre)
(:Game)-[:PUBLISHED_BY]->(:Publisher)
(:Game)-[:AVAILABLE_ON]->(:Platform)

Querying the database

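To interact with the OpenAI API, you must configure your API key as an environment variable. This ensures proper authorization for your requests. You can find more information on obtaining your API key here. To configure the API key, you can use the Python os package: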

os.environ["OPENAI_API_KEY"] = "your-key-here"

Run the above code snippet if you're running the code within a Jupyter notebook.
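If you'd rather not hard-code the key in the notebook, here is a minimal sketch that uses Python's standard getpass module to prompt for it only when the variable is missing. ensure_api_key is a hypothetical helper; its env and ask parameters exist only so the function can be exercised without a real terminal prompt.

```python
import getpass
import os
from typing import Callable, MutableMapping


def ensure_api_key(
    env: MutableMapping[str, str] = os.environ,
    ask: Callable[[str], str] = getpass.getpass,
    name: str = "OPENAI_API_KEY",
) -> str:
    """Return the API key, prompting for it only if it is not already set."""
    key = env.get(name)
    if not key:
        # getpass does not echo the typed value, so the key stays out of
        # the notebook's visible output and source.
        key = ask(f"Enter {name}: ")
        env[name] = key
    return key
```

In the notebook you would simply call ensure_api_key() before creating the chain.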

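Next, create MemgraphQAChain, which will be used in the question-answering process based on your graph data. The temperature parameter is set to zero to ensure predictable and consistent answers. You can set the verbose parameter to True to receive more detailed messages about query generation.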

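chain = MemgraphQAChain.from_llm(
    # model_name is a ChatOpenAI setting, so it is passed to the LLM itself.
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    # Explicit opt-in: the chain runs LLM-generated Cypher against your database.
    allow_dangerous_requests=True,
)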

Now you can start asking questions!

response = chain.invoke("Which platforms is Baldur's Gate 3 available on?")
print(response["result"])

MATCH (:Game{name: "Baldur's Gate 3"})-[:AVAILABLE_ON]->(platform:Platform)

RETURN platform.name

Baldur's Gate 3 is available on PlayStation 5, Mac OS, Windows, and Xbox Series X/S.

response = chain.invoke("Is Baldur's Gate 3 available on Windows?")
print(response["result"])

MATCH (:Game{name: "Baldur's Gate 3"})-[:AVAILABLE_ON]->(:Platform{name: "Windows"})

RETURN "Yes"

Yes, Baldur's Gate 3 is available on Windows.
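Conceptually, a chain like this answers in three steps: an LLM turns the question (together with the graph schema) into Cypher, the query runs against Memgraph, and a second LLM call phrases the returned rows as an answer. The sketch below illustrates that flow with offline stand-ins; answer_question, fake_generate, fake_run and fake_answer are all hypothetical, and this is a simplification, not MemgraphQAChain's source code.

```python
from typing import Any, Callable, Dict, List

Rows = List[Dict[str, Any]]


def answer_question(
    question: str,
    generate_cypher: Callable[[str], str],
    run_query: Callable[[str], Rows],
    answer: Callable[[str, Rows], str],
) -> Dict[str, Any]:
    # 1. First LLM call: question (plus schema, in the real chain) -> Cypher.
    cypher = generate_cypher(question)
    # 2. Execute the generated query against the graph.
    rows = run_query(cypher)
    # 3. Second LLM call: question + rows -> natural-language answer.
    return {"result": answer(question, rows)}


# Offline stand-ins for the two LLM calls and the database.
def fake_generate(question: str) -> str:
    return (
        'MATCH (:Game {name: "Baldur\'s Gate 3"})-[:PUBLISHED_BY]->(p:Publisher) '
        "RETURN p.name"
    )


def fake_run(cypher: str) -> Rows:
    return [{"p.name": "Larian Studios"}]


def fake_answer(question: str, rows: Rows) -> str:
    return ", ".join(str(value) for row in rows for value in row.values())


response = answer_question(
    "Which studio published Baldur's Gate 3?", fake_generate, fake_run, fake_answer
)
print(response["result"])
```

The chain modifiers below change what happens around steps 2 and 3.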

Chain modifiers

To modify the behavior of your chain and obtain more context or additional information, you can change the chain's parameters.

Return direct query results

The return_direct modifier specifies whether to return the direct results of the executed Cypher query or the processed natural language response.

# Return the result of querying the graph directly
chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    return_direct=True,
    allow_dangerous_requests=True,
)

response = chain.invoke("Which studio published Baldur's Gate 3?")
print(response["result"])

MATCH (g:Game {name: "Baldur's Gate 3"})-[:PUBLISHED_BY]->(p:Publisher)

RETURN p.name

[{'p.name': 'Larian Studios'}]
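With return_direct=True, response["result"] is a list of row dictionaries keyed by the RETURN expression (here 'p.name') rather than a sentence. Below is a small, hypothetical helper for pulling one column out of such rows. Keep in mind that the key depends on the Cypher the LLM generates, so a differently phrased query (for example RETURN p.name AS publisher) would change it.

```python
from typing import Any, Dict, List


def column_values(rows: List[Dict[str, Any]], column: str) -> List[Any]:
    """Collect one column from return_direct rows, skipping rows without it."""
    return [row[column] for row in rows if column in row]


# Same shape as the response["result"] printed above.
direct_result = [{"p.name": "Larian Studios"}]
publishers = column_values(direct_result, "p.name")
print(publishers)
```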

Return query intermediate steps

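The return_intermediate_steps chain modifier enhances the returned response by including the intermediate steps of the query in addition to the initial query result.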

# Return all the intermediate steps of query execution
chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    allow_dangerous_requests=True,
    return_intermediate_steps=True,
)

response = chain.invoke("Is Baldur's Gate 3 an Adventure game?")
print(f"Intermediate steps: {response['intermediate_steps']}")
print(f"Final response: {response['result']}")

MATCH (:Game {name: "Baldur's Gate 3"})-[:HAS_GENRE]->(:Genre {name: "Adventure"})

RETURN "Yes"

Intermediate steps: [{'query': 'MATCH (:Game {name: "Baldur\'s Gate 3"})-[:HAS_GENRE]->(:Genre {name: "Adventure"})\nRETURN "Yes"'}, {'context': [{'"Yes"': 'Yes'}]}]

Final response: Yes.
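The intermediate steps printed above are a list of dictionaries: one holding the generated query and one holding the context returned by the database. Logging the generated Cypher this way is handy when debugging wrong answers. The helper below is a hypothetical sketch that assumes exactly that shape.

```python
from typing import Any, Dict, List, Tuple


def unpack_steps(steps: List[Dict[str, Any]]) -> Tuple[str, Any]:
    """Return (generated Cypher, database context) from intermediate_steps."""
    merged: Dict[str, Any] = {}
    for step in steps:
        merged.update(step)
    return merged.get("query", ""), merged.get("context", [])


# Copied from the output printed above.
steps = [
    {"query": 'MATCH (:Game {name: "Baldur\'s Gate 3"})-[:HAS_GENRE]->(:Genre {name: "Adventure"})\nRETURN "Yes"'},
    {"context": [{'"Yes"': "Yes"}]},
]
cypher, context = unpack_steps(steps)
print(cypher)
print(context)
```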

Limit the number of query results

The top_k modifier can be used when you want to restrict the maximum number of query results.

# Limit the maximum number of results returned by query
chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    top_k=2,
    allow_dangerous_requests=True,
)

response = chain.invoke("What genres are associated with Baldur's Gate 3?")
print(response["result"])

MATCH (:Game {name: "Baldur's Gate 3"})-[:HAS_GENRE]->(g:Genre)

RETURN g.name;

Adventure, Role-Playing Game
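The answer above lists only two of the three genres stored earlier (Strategy is missing), which is consistent with the query result being cut to top_k rows before the answering step sees it. A minimal sketch of that truncation follows, assuming a plain slice of the result list and assuming the database returned the rows in this order (Cypher does not guarantee row order without ORDER BY). limit_context is a hypothetical name.

```python
from typing import Any, Dict, List

Rows = List[Dict[str, Any]]


def limit_context(rows: Rows, top_k: int) -> Rows:
    """Keep only the first top_k rows for the answering step."""
    return rows[:top_k]


# The three genres stored for Baldur's Gate 3, in the order assumed here.
rows = [
    {"g.name": "Adventure"},
    {"g.name": "Role-Playing Game"},
    {"g.name": "Strategy"},
]
context = limit_context(rows, top_k=2)
print(", ".join(row["g.name"] for row in context))
```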

Advanced querying

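As the complexity of your solution grows, you might encounter different use cases that require careful handling. Ensuring your application's scalability is essential to maintaining a smooth user flow without any hitches.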

Let's instantiate our chain once again and try asking some questions that users might plausibly ask.

chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    allow_dangerous_requests=True,
)

response = chain.invoke("Is Baldur's Gate 3 available on PS5?")
print(response["result"])

MATCH (:Game{name: "Baldur's Gate 3"})-[:AVAILABLE_ON]->(:Platform{name: "PS5"})

RETURN "Yes"

I don't know the answer.

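The generated Cypher query is valid, but we didn't receive any information in the response: it matches a platform named "PS5", while the database stores that platform as "PlayStation 5", so no rows come back. This illustrates a common challenge when working with LLMs: the misalignment between how users phrase queries and how data is stored. Prompt refinement, the process of honing the model's prompts to better grasp these distinctions, is an effective way to tackle this issue. Through prompt refinement, the model becomes more proficient at generating precise and relevant queries, leading to the successful retrieval of the desired data.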
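A different technique from the prompt refinement described next is to normalize known aliases in the user's question before it reaches the chain. The sketch below assumes a hand-maintained alias table (PLATFORM_ALIASES and normalize_question are hypothetical names); it is simple and deterministic, but it only covers the phrasings you list.

```python
import re
from typing import Dict

# Hypothetical alias table: user phrasing -> name stored in the graph.
PLATFORM_ALIASES: Dict[str, str] = {
    "PS5": "PlayStation 5",
    "PS 5": "PlayStation 5",
    "Play Station 5": "PlayStation 5",
}


def normalize_question(question: str, aliases: Dict[str, str] = PLATFORM_ALIASES) -> str:
    """Replace known aliases (case-insensitive, whole words) with canonical names."""
    # Try longer aliases first so a short alias never matches inside a longer one.
    for alias in sorted(aliases, key=len, reverse=True):
        canonical = aliases[alias]
        pattern = r"\b" + re.escape(alias) + r"\b"
        # A function replacement avoids treating backslashes in names as escapes.
        question = re.sub(pattern, lambda _match: canonical, question, flags=re.IGNORECASE)
    return question


print(normalize_question("Is Baldur's Gate 3 available on PS5?"))
```

You would then call chain.invoke(normalize_question(user_question)).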

Prompt refinement

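To address this, we can adjust the initial Cypher prompt of the QA chain. This involves adding guidance for the LLM on how users can refer to specific platforms, such as PS5 in our case. We achieve this using the LangChain PromptTemplate, creating a modified initial prompt. This modified prompt is then supplied as an argument to our refined MemgraphQAChain instance.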

MEMGRAPH_GENERATION_TEMPLATE = """Your task is to directly translate natural language inquiry into precise and executable Cypher query for Memgraph database.
You will utilize a provided database schema to understand the structure, nodes and relationships within the Memgraph database.
Instructions:
- Use provided node and relationship labels and property names from the
schema which describes the database's structure. Upon receiving a user
question, synthesize the schema to craft a precise Cypher query that
directly corresponds to the user's intent.
- Generate valid executable Cypher queries on top of Memgraph database.
Any explanation, context, or additional information that is not a part
of the Cypher query syntax should be omitted entirely.
- Use Memgraph MAGE procedures instead of Neo4j APOC procedures.
- Do not include any explanations or apologies in your responses.
- Do not include any text except the generated Cypher statement.
- For queries that ask for information or functionalities outside the direct
generation of Cypher queries, use the Cypher query format to communicate
limitations or capabilities. For example: RETURN "I am designed to generate
Cypher queries based on the provided schema only."
Schema:
{schema}

With all the above information and instructions, generate Cypher query for the
user question.
If the user asks about PS5, Play Station 5 or PS 5, that is the platform called PlayStation 5.

The question is:
{question}"""

MEMGRAPH_GENERATION_PROMPT = PromptTemplate(
    input_variables=["schema", "question"], template=MEMGRAPH_GENERATION_TEMPLATE
)

chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    cypher_prompt=MEMGRAPH_GENERATION_PROMPT,
    graph=graph,
    allow_dangerous_requests=True,
)

response = chain.invoke("Is Baldur's Gate 3 available on PS5?")
print(response["result"])

MATCH (:Game{name: "Baldur's Gate 3"})-[:AVAILABLE_ON]->(:Platform{name: "PlayStation 5"})

RETURN "Yes"

Yes, Baldur's Gate 3 is available on PS5.

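Now, with the revised initial Cypher prompt that includes guidance on platform naming, we get accurate and relevant results that align more closely with user queries.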

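This approach allows for further improvement of your QA chain. You can easily integrate extra prompt refinement data into your chain, thereby enhancing the overall user experience of your application.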
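If you have many such aliases, you could generate the guidance sentences instead of writing them by hand. The hypothetical alias_hints helper below builds one sentence per canonical name; for the PS5 case it reproduces the sentence used in MEMGRAPH_GENERATION_TEMPLATE.

```python
from typing import Dict, List


def alias_hints(aliases: Dict[str, List[str]], kind: str = "platform") -> str:
    """Build one prompt sentence per canonical name from its list of aliases."""
    lines = []
    for canonical, variants in aliases.items():
        if not variants:
            continue  # nothing to say about names without aliases
        if len(variants) == 1:
            listed = variants[0]
        else:
            # "A, B or C", matching the phrasing of the hand-written sentence.
            listed = ", ".join(variants[:-1]) + " or " + variants[-1]
        lines.append(f"If the user asks about {listed}, that is the {kind} called {canonical}.")
    return "\n".join(lines)


hints = alias_hints({"PlayStation 5": ["PS5", "Play Station 5", "PS 5"]})
print(hints)
```

You could then place hints in the template where the hard-coded PS5 sentence is now. Because PromptTemplate uses f-string formatting by default, any literal { or } in the hints would need to be doubled ({{ and }}).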

Constructing a knowledge graph

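Transforming unstructured data into structured data is neither an easy nor a straightforward task. This guide shows how an LLM can help with that and how to construct a knowledge graph in Memgraph. Once the knowledge graph is created, you can use it in your GraphRAG application.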

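The steps for constructing a knowledge graph from text are:

- Extracting structured information from text: an LLM is used to extract structured graph information from the text, in the form of nodes and relationships.
- Storing into Memgraph: the extracted structured graph information is stored in Memgraph.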

Extracting structured information from text

Besides all the imports in the setup section, import LLMGraphTransformer and Document, which will be used to extract structured information from text.

from langchain_core.documents import Document
from langchain_experimental.graph_transformers import LLMGraphTransformer

Below is an example text about Charles Darwin (source) from which the knowledge graph will be constructed.

text = """

Charles Robert Darwin was an English naturalist, geologist, and biologist,

widely known for his contributions to evolutionary biology. His proposition that

all species of life have descended from a common ancestor is now generally

accepted and considered a fundamental scientific concept. In a joint

publication with Alfred Russel Wallace, he introduced his scientific theory that

this branching pattern of evolution resulted from a process he called natural

selection, in which the struggle for existence has a similar effect to the

artificial selection involved in selective breeding. Darwin has been

described as one of the most influential figures in human history and was

honoured by burial in Westminster Abbey.

"""

The next step is to initialize LLMGraphTransformer from the desired LLM and convert the document to the graph structure.

llm = ChatOpenAI(temperature=0, model_name="gpt-4-turbo")
llm_transformer = LLMGraphTransformer(llm=llm)
documents = [Document(page_content=text)]
graph_documents = llm_transformer.convert_to_graph_documents(documents)

Under the hood, the LLM extracts important entities from the text and returns them as a list of nodes and relationships. Here's how it looks:

print(graph_documents)

[GraphDocument(nodes=[Node(id='Charles Robert Darwin', type='Person', properties={}), Node(id='English', type='Nationality', properties={}), Node(id='Naturalist', type='Profession', properties={}), Node(id='Geologist', type='Profession', properties={}), Node(id='Biologist', type='Profession', properties={}), Node(id='Evolutionary Biology', type='Field', properties={}), Node(id='Common Ancestor', type='Concept', properties={}), Node(id='Scientific Concept', type='Concept', properties={}), Node(id='Alfred Russel Wallace', type='Person', properties={}), Node(id='Natural Selection', type='Concept', properties={}), Node(id='Selective Breeding', type='Concept', properties={}), Node(id='Westminster Abbey', type='Location', properties={})], relationships=[Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='English', type='Nationality', properties={}), type='NATIONALITY', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Naturalist', type='Profession', properties={}), type='PROFESSION', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Geologist', type='Profession', properties={}), type='PROFESSION', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Biologist', type='Profession', properties={}), type='PROFESSION', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Evolutionary Biology', type='Field', properties={}), type='CONTRIBUTION', properties={}), Relationship(source=Node(id='Common Ancestor', type='Concept', properties={}), target=Node(id='Scientific Concept', type='Concept', properties={}), type='BASIS', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Alfred Russel Wallace', type='Person', properties={}), type='COLLABORATION', properties={}), Relationship(source=Node(id='Natural Selection', type='Concept', properties={}), target=Node(id='Selective Breeding', type='Concept', properties={}), type='COMPARISON', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Westminster Abbey', type='Location', properties={}), type='BURIAL', properties={})], source=Document(metadata={}, page_content='\n Charles Robert Darwin was an English naturalist, geologist, and biologist,\n widely known for his contributions to evolutionary biology. His proposition that\n all species of life have descended from a common ancestor is now generally\n accepted and considered a fundamental scientific concept. In a joint\n publication with Alfred Russel Wallace, he introduced his scientific theory that\n this branching pattern of evolution resulted from a process he called natural\n selection, in which the struggle for existence has a similar effect to the\n artificial selection involved in selective breeding. Darwin has been\n described as one of the most influential figures in human history and was\n honoured by burial in Westminster Abbey.\n'))]
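The raw repr is hard to scan. A small hypothetical helper that counts node and relationship types can give a quicker overview; it relies only on the nodes, relationships and type attributes visible in the output above. The example uses SimpleNamespace stand-ins with a few of the printed entries so it runs without LangChain; with LangChain installed you would pass graph_documents[0] instead.

```python
from collections import Counter
from types import SimpleNamespace
from typing import Any, Dict


def summarize(graph_document: Any) -> Dict[str, Counter]:
    """Count node types and relationship types in a GraphDocument-like object."""
    return {
        "nodes": Counter(node.type for node in graph_document.nodes),
        "relationships": Counter(rel.type for rel in graph_document.relationships),
    }


def node(node_id: str, node_type: str) -> SimpleNamespace:
    # Stand-in exposing the same id/type attributes as the printed Node objects.
    return SimpleNamespace(id=node_id, type=node_type)


sample = SimpleNamespace(
    nodes=[
        node("Charles Robert Darwin", "Person"),
        node("Naturalist", "Profession"),
        node("Geologist", "Profession"),
        node("Biologist", "Profession"),
        node("Alfred Russel Wallace", "Person"),
        node("Westminster Abbey", "Location"),
    ],
    # Real relationships also carry source/target; only type is needed here.
    relationships=[
        SimpleNamespace(type="PROFESSION"),
        SimpleNamespace(type="PROFESSION"),
        SimpleNamespace(type="PROFESSION"),
        SimpleNamespace(type="COLLABORATION"),
        SimpleNamespace(type="BURIAL"),
    ],
)

summary = summarize(sample)
print(summary["nodes"].most_common())
print(summary["relationships"].most_common())
```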

Storing into Memgraph

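Once you have the data ready in the GraphDocument format, that is, as nodes and relationships, you can use the add_graph_documents method to import it into Memgraph. That method transforms the list of graph_documents into the appropriate Cypher queries that need to be executed in Memgraph. Once that's done, the knowledge graph is stored in Memgraph.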
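To give a feel for what converting graph documents into Cypher queries involves, here is a simplified sketch. It is not MemgraphGraph's implementation: Node and Relationship are minimal stand-in dataclasses, and the statement shapes are assumptions. Node ids are stored in an id property (consistent with the {id: ...} lookups used later in this guide) and passed as parameters, while labels and relationship types, which generally cannot be passed as parameters in Cypher, are backtick-quoted.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple

Statement = Tuple[str, Dict[str, Any]]


@dataclass(frozen=True)
class Node:
    """Minimal stand-in for a graph-document node (not LangChain's class)."""
    id: str
    type: str


@dataclass(frozen=True)
class Relationship:
    """Minimal stand-in for a graph-document relationship."""
    source: Node
    target: Node
    type: str


def quote(name: str) -> str:
    # Backtick-quote a label or relationship type, escaping embedded backticks.
    return "`" + name.replace("`", "``") + "`"


def to_cypher(nodes: List[Node], relationships: List[Relationship]) -> List[Statement]:
    statements: List[Statement] = []
    for n in nodes:
        # MERGE keeps the import idempotent: re-running it does not duplicate nodes.
        statements.append((f"MERGE (n:{quote(n.type)} {{id: $id}})", {"id": n.id}))
    for r in relationships:
        cypher = (
            f"MATCH (s:{quote(r.source.type)} {{id: $source}}), "
            f"(t:{quote(r.target.type)} {{id: $target}}) "
            f"MERGE (s)-[:{quote(r.type)}]->(t)"
        )
        statements.append((cypher, {"source": r.source.id, "target": r.target.id}))
    return statements


darwin = Node("Charles Robert Darwin", "Person")
wallace = Node("Alfred Russel Wallace", "Person")
stmts = to_cypher([darwin, wallace], [Relationship(darwin, wallace, "COLLABORATION")])
for cypher, params in stmts:
    print(cypher, params)
```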

# Empty the database
graph.query("STORAGE MODE IN_MEMORY_ANALYTICAL")
graph.query("DROP GRAPH")
graph.query("STORAGE MODE IN_MEMORY_TRANSACTIONAL")

# Create KG
graph.add_graph_documents(graph_documents)

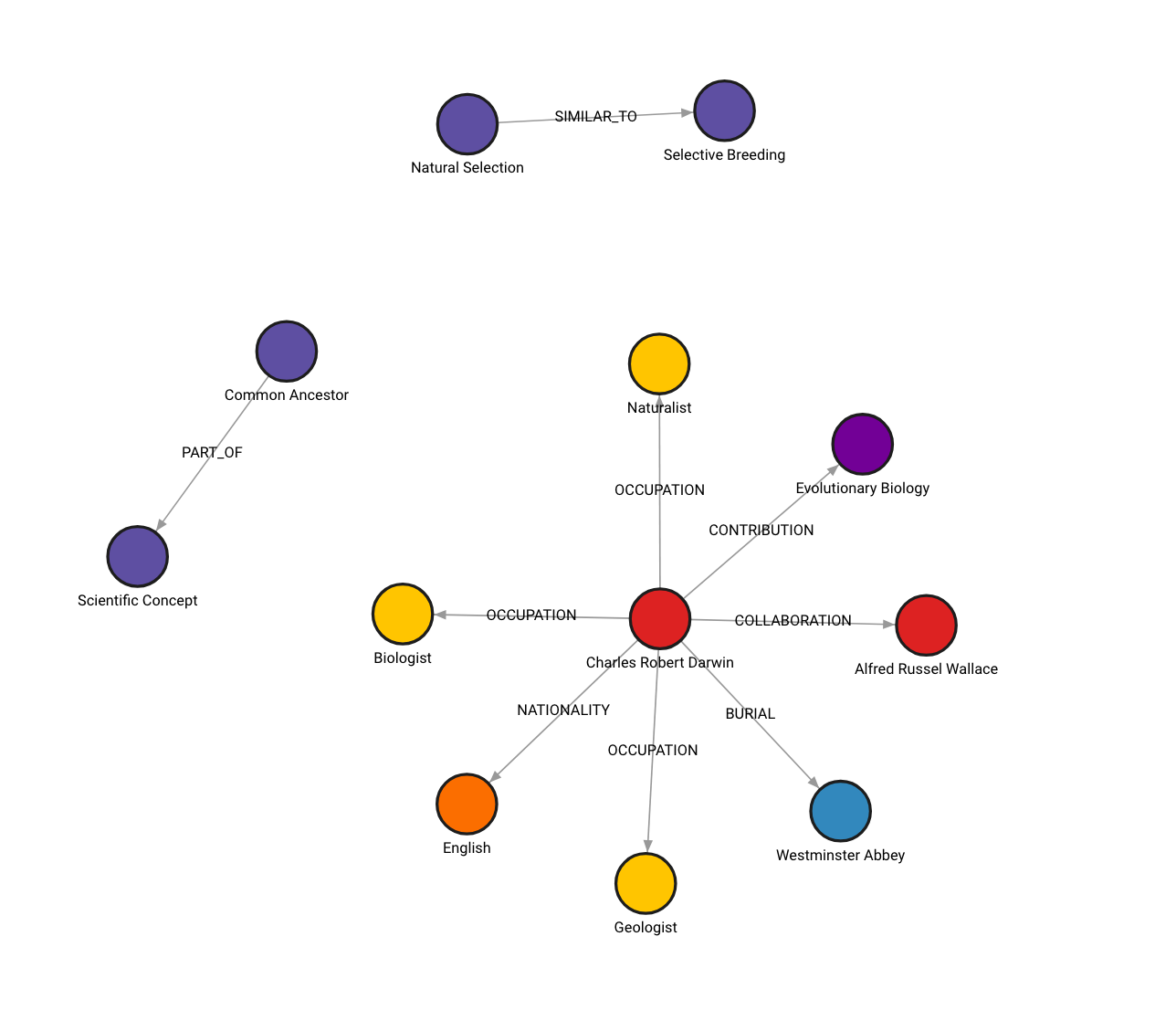

Here is what the graph looks like in Memgraph Lab (check localhost:3000):

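If you tried this out and got a different graph, that is expected behavior. The graph construction process is non-deterministic because the LLM used to generate nodes and relationships from unstructured data is non-deterministic; even with temperature=0, model outputs are not guaranteed to be identical across runs.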

Additional options

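Additionally, you have the flexibility to define the specific types of nodes and relationships to extract, according to your requirements.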

llm_transformer_filtered = LLMGraphTransformer(
    llm=llm,
    allowed_nodes=["Person", "Nationality", "Concept"],
    allowed_relationships=["NATIONALITY", "INVOLVED_IN", "COLLABORATES_WITH"],
)
graph_documents_filtered = llm_transformer_filtered.convert_to_graph_documents(
    documents
)

print(f"Nodes:{graph_documents_filtered[0].nodes}")
print(f"Relationships:{graph_documents_filtered[0].relationships}")

Nodes:[Node(id='Charles Robert Darwin', type='Person', properties={}), Node(id='English', type='Nationality', properties={}), Node(id='Evolutionary Biology', type='Concept', properties={}), Node(id='Natural Selection', type='Concept', properties={}), Node(id='Alfred Russel Wallace', type='Person', properties={})]

Relationships:[Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='English', type='Nationality', properties={}), type='NATIONALITY', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Evolutionary Biology', type='Concept', properties={}), type='INVOLVED_IN', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Natural Selection', type='Concept', properties={}), type='INVOLVED_IN', properties={}), Relationship(source=Node(id='Charles Robert Darwin', type='Person', properties={}), target=Node(id='Alfred Russel Wallace', type='Person', properties={}), type='COLLABORATES_WITH', properties={})]
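The allowed_nodes and allowed_relationships arguments steer the extraction toward these types. If you already have an unfiltered result (like graph_documents from earlier) and want to try a whitelist without another LLM call, a plain-Python post-filter is one option. filter_graph is a hypothetical helper, not part of LLMGraphTransformer, and it can only remove items: unlike the filtered run above, it will not re-label Evolutionary Biology as a Concept or produce INVOLVED_IN relationships.

```python
from types import SimpleNamespace
from typing import Any, Iterable, List, Tuple


def filter_graph(
    nodes: List[Any],
    relationships: List[Any],
    allowed_nodes: Iterable[str],
    allowed_relationships: Iterable[str],
) -> Tuple[List[Any], List[Any]]:
    """Keep only whitelisted node types and relationship types (post hoc)."""
    node_types = set(allowed_nodes)
    rel_types = set(allowed_relationships)
    kept_nodes = [n for n in nodes if n.type in node_types]
    # A relationship survives only if its own type and both endpoint types are allowed.
    kept_rels = [
        r
        for r in relationships
        if r.type in rel_types
        and r.source.type in node_types
        and r.target.type in node_types
    ]
    return kept_nodes, kept_rels


# Stand-ins shaped like the unfiltered output printed earlier.
darwin = SimpleNamespace(id="Charles Robert Darwin", type="Person")
english = SimpleNamespace(id="English", type="Nationality")
naturalist = SimpleNamespace(id="Naturalist", type="Profession")
nodes = [darwin, english, naturalist]
relationships = [
    SimpleNamespace(source=darwin, target=english, type="NATIONALITY"),
    SimpleNamespace(source=darwin, target=naturalist, type="PROFESSION"),
]

kept_nodes, kept_rels = filter_graph(
    nodes,
    relationships,
    allowed_nodes=["Person", "Nationality", "Concept"],
    allowed_relationships=["NATIONALITY", "INVOLVED_IN", "COLLABORATES_WITH"],
)
print([n.id for n in kept_nodes])
print([r.type for r in kept_rels])
```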

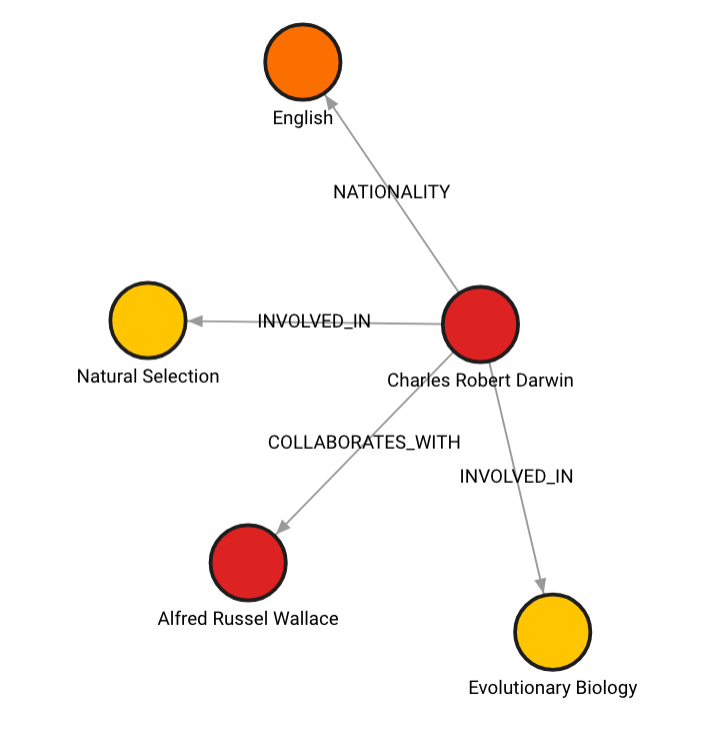

Here is what the graph would look like in that case:

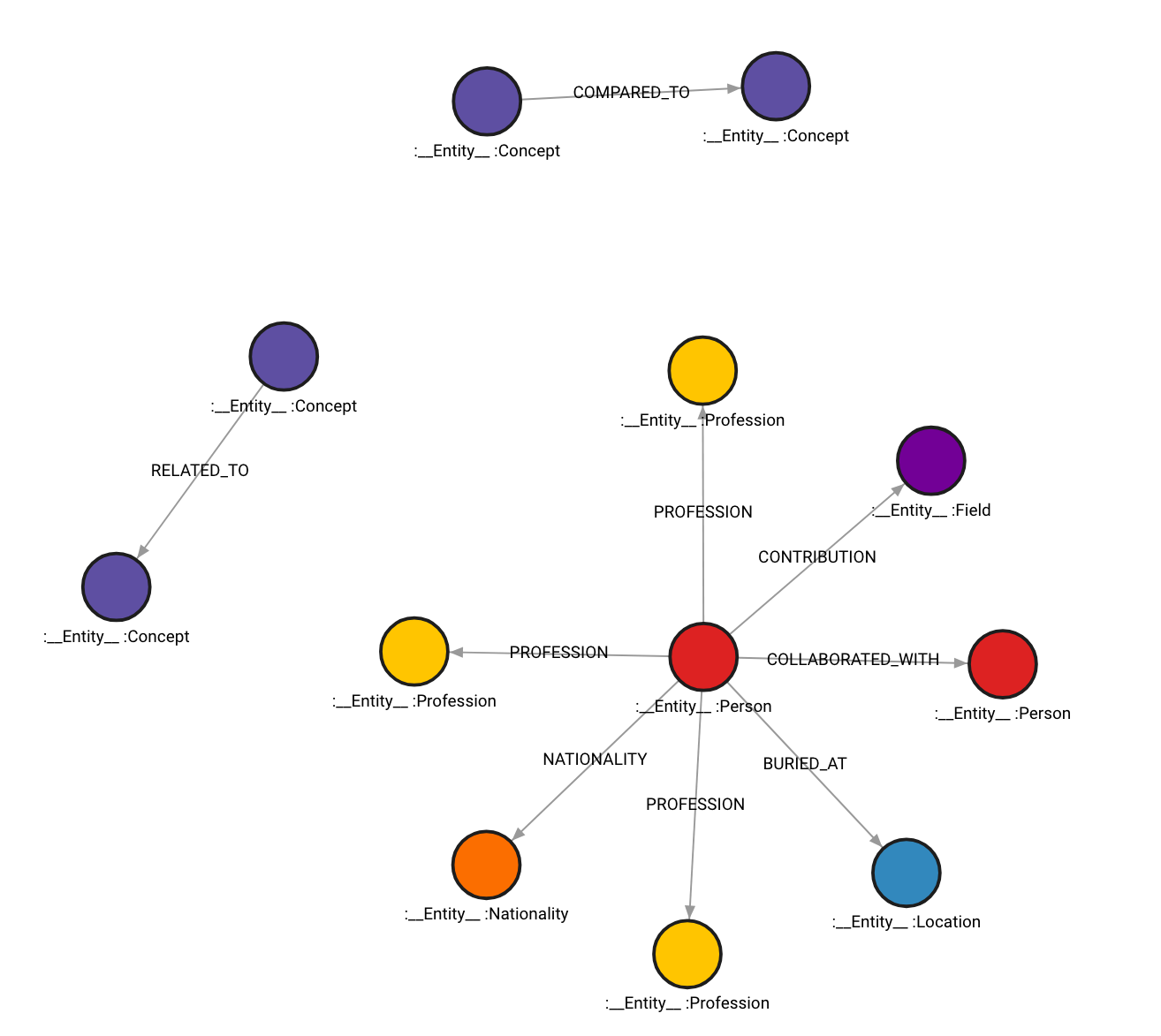

Your graph can also have __Entity__ labels on all nodes, which will be indexed for faster retrieval.
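A shared label pays off when you look up an entity whose type you don't know in advance. The sketch below builds two Cypher strings: a type-agnostic lookup on __Entity__ and, for reference, Memgraph's syntax for a label-property index on __Entity__(id). The helper names are hypothetical, and whether you need to create the index yourself depends on your MemgraphGraph version; the text states that these labels are indexed.

```python
ENTITY_LABEL = "__Entity__"


def entity_index_statement(label: str = ENTITY_LABEL, prop: str = "id") -> str:
    """Memgraph label-property index on the shared base label."""
    return f"CREATE INDEX ON :{label}({prop});"


def entity_lookup_query(label: str = ENTITY_LABEL) -> str:
    """Find an entity by id without knowing its specific type."""
    # labels(n) returns every label on the node, e.g. Person and __Entity__.
    return f"MATCH (n:{label} {{id: $id}}) RETURN n, labels(n) AS labels"


print(entity_index_statement())
print(entity_lookup_query())
```

graph.query(entity_lookup_query(), {"id": "Charles Robert Darwin"}) would then find the node whether it is stored as a Person or under any other type, again assuming graph.query takes a parameter dictionary as its second argument.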

# Drop graph
graph.query("STORAGE MODE IN_MEMORY_ANALYTICAL")
graph.query("DROP GRAPH")
graph.query("STORAGE MODE IN_MEMORY_TRANSACTIONAL")

# Store to Memgraph with Entity label
graph.add_graph_documents(graph_documents, baseEntityLabel=True)

Here is what the graph would look like:

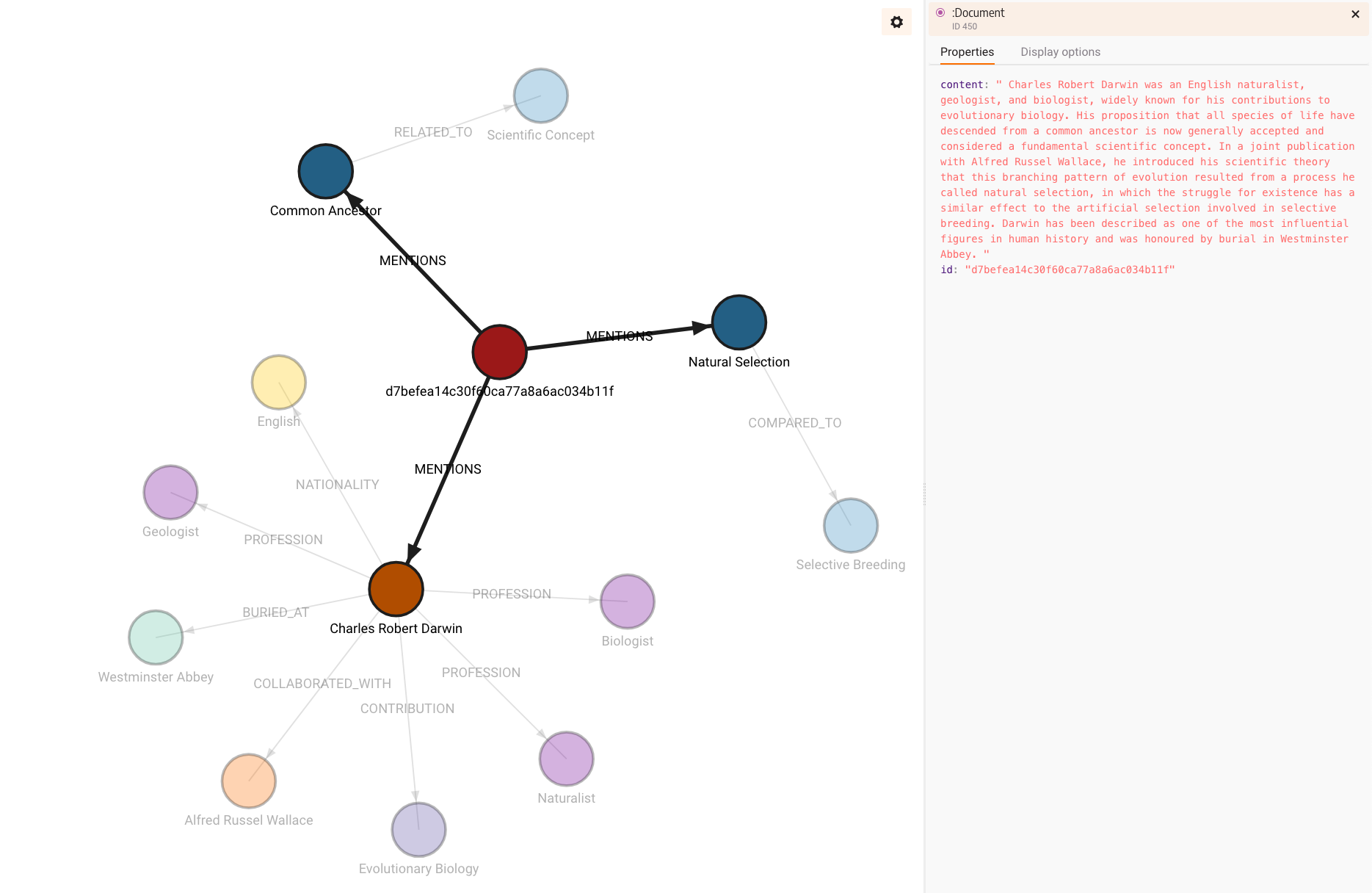

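There's also an option to include the source of the information obtained in the graph. To do that, set include_source to True; the source document is then stored and linked to the nodes in the graph using the MENTIONS relationship.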

# Drop graph
graph.query("STORAGE MODE IN_MEMORY_ANALYTICAL")
graph.query("DROP GRAPH")
graph.query("STORAGE MODE IN_MEMORY_TRANSACTIONAL")

# Store to Memgraph with source included
graph.add_graph_documents(graph_documents, include_source=True)

The constructed graph would look like this:

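Notice how the content of the source is stored and an id property is generated, since the document didn't have any id. You can combine having both the __Entity__ label and the document source. Still, be aware that both take up memory, especially the included source, because of the long content strings.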
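The text doesn't say how the id is derived. One common approach, sketched here as an assumption rather than a description of MemgraphGraph's internals, is to hash the page content: that gives a short, fixed-length id that stays the same when the same text is imported again. document_id is a hypothetical helper. Note that this only keeps the id small; with include_source=True the full content is still stored.

```python
import hashlib


def document_id(page_content: str) -> str:
    """Derive a stable id from the document text (hypothetical helper)."""
    # The same text always hashes to the same 32-character hex string,
    # so re-importing an unchanged document yields the same id.
    return hashlib.md5(page_content.encode("utf-8")).hexdigest()


first = document_id("Charles Robert Darwin was an English naturalist.")
second = document_id("Charles Robert Darwin was an English naturalist.")
print(first, first == second)
```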

In the end, you can query the knowledge graph, as explained in the previous section:

chain = MemgraphQAChain.from_llm(
    ChatOpenAI(temperature=0, model_name="gpt-4-turbo"),
    graph=graph,
    allow_dangerous_requests=True,
)
print(chain.invoke("Who Charles Robert Darwin collaborated with?")["result"])

MATCH (:Person {id: "Charles Robert Darwin"})-[:COLLABORATION]->(collaborator)

RETURN collaborator;

Alfred Russel Wallace