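Build a Retrieval Augmented Generation (RAG) App: Part 1

One of the most powerful applications enabled by LLMs is sophisticated question-answering (Q&A) chatbots: applications that can answer questions about specific source information. These applications use a technique known as Retrieval Augmented Generation, or RAG.

This is a multi-part tutorial.

This tutorial will show how to build a simple Q&A application over a text data source. Along the way we'll go over a typical Q&A architecture and highlight additional resources for more advanced Q&A techniques. We'll also see how LangSmith can help us trace and understand our application. LangSmith will become increasingly helpful as our application grows in complexity.

If you're already familiar with basic retrieval, you might also be interested in this high-level overview of different retrieval techniques.

Note: Here we focus on Q&A for unstructured data. If you are interested in RAG over structured data, check out our tutorial on doing question answering over SQL data.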

概觀

典型的 RAG 應用程式有兩個主要組件

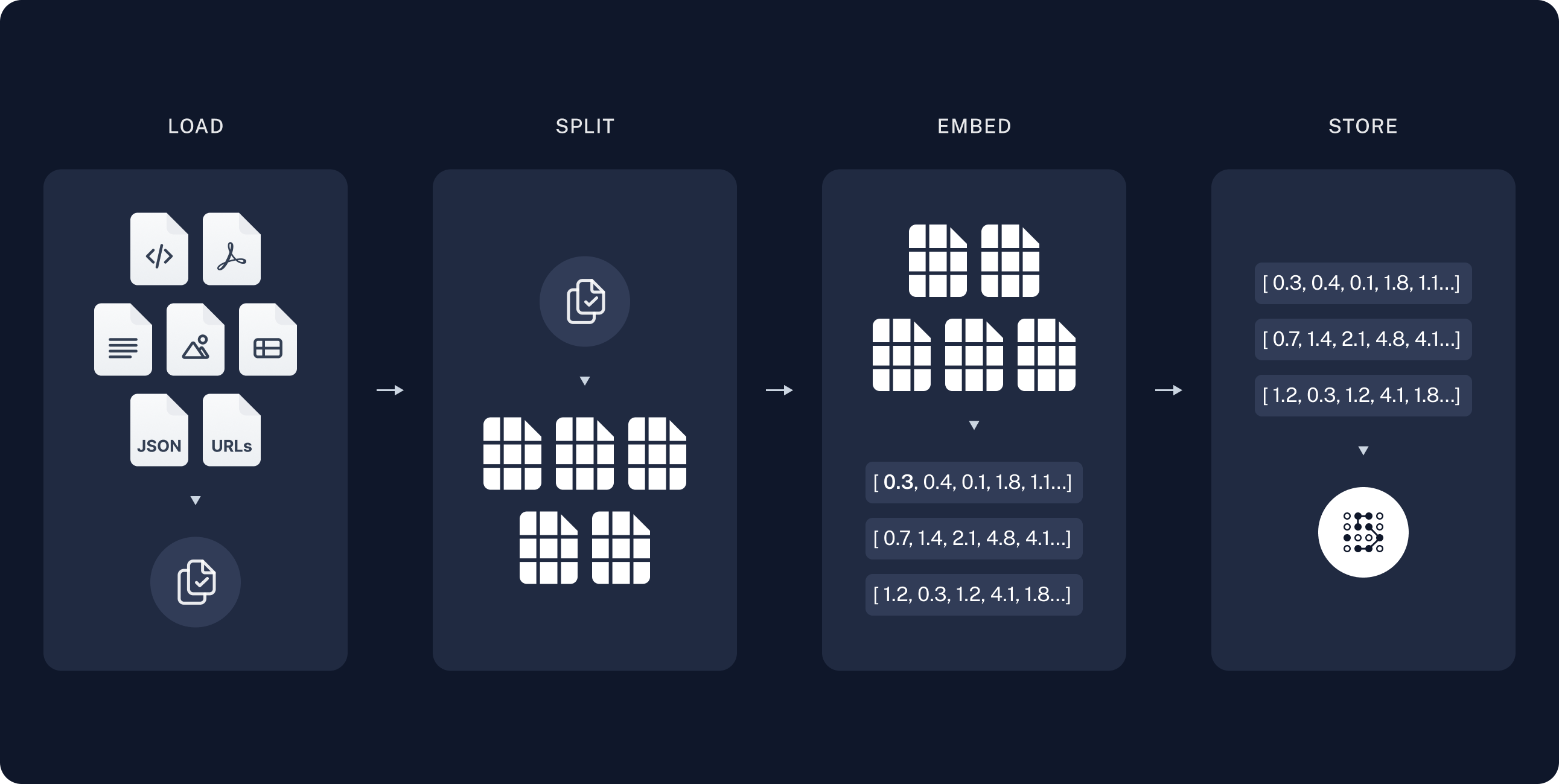

索引:用於從來源擷取資料並為其建立索引的管線。這通常離線進行。

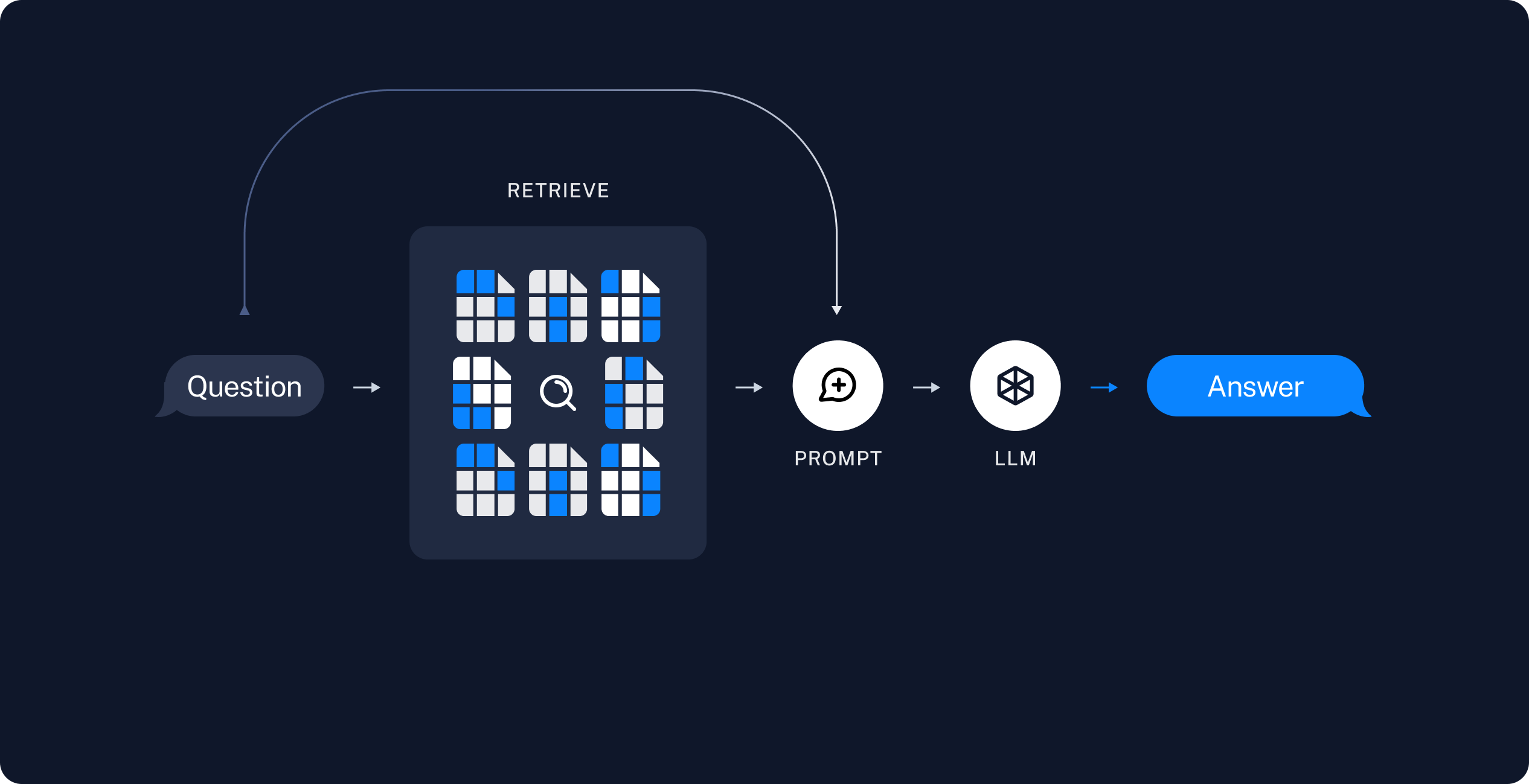

檢索和生成:實際的 RAG 鏈,它在運行時獲取使用者查詢,並從索引中檢索相關資料,然後將其傳遞給模型。

注意:本教學課程的索引部分將主要遵循語意搜尋教學課程。

從原始資料到答案的最常見完整順序如下所示

索引

- 載入:首先我們需要載入我們的資料。這可以使用 文件載入器 完成。

- 分割:文字分割器 將大型

Documents分割成較小的區塊。這對於索引資料和將其傳遞到模型都很有用,因為大型區塊更難搜尋,並且無法放入模型的有限上下文視窗中。 - 儲存:我們需要某個地方來儲存和索引我們的分割區塊,以便稍後可以對其進行搜尋。這通常使用 向量儲存區 和 嵌入 模型完成。
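The Split step can be made concrete with a minimal pure-Python sketch of fixed-size chunking with overlap. It is a simplification. The RecursiveCharacterTextSplitter used later in this tutorial prefers to break on separators such as paragraphs and lines rather than at fixed offsets. The function `chunk_text` and the sample string are hypothetical. They only illustrate what a chunk size, an overlap, and a recorded start offset mean.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[dict]:
    """Cut text into fixed-size windows; consecutive windows share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    windows = []
    start = 0
    while start < len(text):
        # Record where the window starts in the original text (like a start index).
        windows.append({"start_index": start, "text": text[start:start + chunk_size]})
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
        start += step
    return windows


for window in chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1):
    print(window["start_index"], window["text"])
```

Each window starts `chunk_size - chunk_overlap` characters after the previous one. As a result, the last `chunk_overlap` characters of one chunk also appear at the start of the next.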

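Retrieval and generation

Once we've indexed our data, we will use LangGraph as our orchestration framework to implement the retrieval and generation steps.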

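Setup

Jupyter Notebook

This and other tutorials are perhaps most conveniently run in a Jupyter notebook. Going through guides in an interactive environment is a great way to better understand them. See here for instructions on how to install.

Installation

This tutorial requires these langchain dependencies:

Pip:

```
%pip install --quiet --upgrade langchain-text-splitters langchain-community langgraph
```

Conda:

```
conda install langchain-text-splitters langchain-community langgraph -c conda-forge
```

For more details, see our Installation guide.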

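LangSmith

Many of the applications you build with LangChain will contain multiple steps with multiple invocations of LLM calls. As these applications get more and more complex, it becomes crucial to be able to inspect what exactly is going on inside your chain or agent. The best way to do this is with LangSmith.

After you sign up at the link above, make sure to set your environment variables to start logging traces:

```shell
export LANGSMITH_TRACING="true"
export LANGSMITH_API_KEY="..."
```

Or, if in a notebook, you can set them with:

```python
import getpass
import os

os.environ["LANGSMITH_TRACING"] = "true"
os.environ["LANGSMITH_API_KEY"] = getpass.getpass()
```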

Components

We will need to select three components from LangChain's suite of integrations: a chat model, an embeddings model, and a vector store.

Chat model:

```shell
pip install -qU "langchain[openai]"
```

```python
import getpass
import os

if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter API key for OpenAI: ")

from langchain.chat_models import init_chat_model

llm = init_chat_model("gpt-4o-mini", model_provider="openai")
```

Embeddings model:

```shell
pip install -qU langchain-openai
```

```python
import getpass
import os

if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter API key for OpenAI: ")

from langchain_openai import OpenAIEmbeddings

embeddings = OpenAIEmbeddings(model="text-embedding-3-large")
```

Vector store:

```shell
pip install -qU langchain-core
```

```python
from langchain_core.vectorstores import InMemoryVectorStore

vector_store = InMemoryVectorStore(embeddings)
```

Preview

In this guide we'll build an app that answers questions about a website's content. The specific website we will use is the LLM Powered Autonomous Agents blog post by Lilian Weng, which allows us to ask questions about the contents of the post.

We can create a simple indexing pipeline and RAG chain to do this in about 50 lines of code.

```python
import bs4
from langchain import hub
from langchain_community.document_loaders import WebBaseLoader
from langchain_core.documents import Document
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langgraph.graph import START, StateGraph
from typing_extensions import List, TypedDict

# `llm` and `vector_store` come from the Components section above.

# Load and chunk contents of the blog
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
all_splits = text_splitter.split_documents(docs)

# Index chunks
_ = vector_store.add_documents(documents=all_splits)

# Define prompt for question-answering
prompt = hub.pull("rlm/rag-prompt")


# Define state for application
class State(TypedDict):
    question: str
    context: List[Document]
    answer: str


# Define application steps
def retrieve(state: State):
    retrieved_docs = vector_store.similarity_search(state["question"])
    return {"context": retrieved_docs}


def generate(state: State):
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}


# Compile application and test
graph_builder = StateGraph(State).add_sequence([retrieve, generate])
graph_builder.add_edge(START, "retrieve")
graph = graph_builder.compile()

response = graph.invoke({"question": "What is Task Decomposition?"})
print(response["answer"])
```

Task Decomposition is the process of breaking down a complicated task into smaller, manageable steps to facilitate easier execution and understanding. Techniques like Chain of Thought (CoT) and Tree of Thoughts (ToT) guide models to think step-by-step, allowing them to explore multiple reasoning possibilities. This method enhances performance on complex tasks and provides insight into the model's thinking process.

Check out the LangSmith trace.

Detailed walkthrough

Let's go through the above code step by step to really understand what's going on.

1. Indexing

Loading documents

We first need to load the blog post contents. We can use DocumentLoaders for this: objects that load data from a source and return a list of Document objects.

In this case we'll use the WebBaseLoader, which uses urllib to load HTML from web URLs and BeautifulSoup to parse it to text. We can customize the HTML -> text parsing by passing parameters to the BeautifulSoup parser via bs_kwargs (see the BeautifulSoup docs). In this case only HTML tags with class "post-content", "post-title", or "post-header" are relevant, so we'll remove all others.

```python
import bs4
from langchain_community.document_loaders import WebBaseLoader

# Only keep post title, headers, and content from the full HTML.
bs4_strainer = bs4.SoupStrainer(class_=("post-title", "post-header", "post-content"))
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs={"parse_only": bs4_strainer},
)
docs = loader.load()

assert len(docs) == 1
print(f"Total characters: {len(docs[0].page_content)}")
```

Total characters: 43131

```python
print(docs[0].page_content[:500])
```

LLM Powered Autonomous Agents

Date: June 23, 2023 | Estimated Reading Time: 31 min | Author: Lilian Weng

Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.

Agent System Overview#

In
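Roughly the same class-based filtering can be reproduced with the standard library, which helps show what `parse_only` achieves: text outside the wanted elements never reaches the output. The sketch below uses `html.parser.HTMLParser` rather than BeautifulSoup. `ClassTextExtractor` and the sample HTML are hypothetical. The extractor tracks nesting depth so that text in child tags (such as `<p>` or `<b>`) inside a wanted element is kept.

```python
from html.parser import HTMLParser

WANTED = {"post-title", "post-header", "post-content"}
# Void elements never get an end tag, so they must not affect the depth counter.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect text only from elements whose class attribute contains a wanted class."""

    def __init__(self, wanted: set[str]):
        super().__init__()
        self.wanted = wanted
        self.depth = 0  # > 0 while inside a wanted element (counts nested open tags)
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth > 0:
            self.depth += 1  # a child of a wanted element
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.wanted.intersection(classes):
            self.depth = 1  # entering a wanted element

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0 and data.strip():
            self.parts.append(data.strip())


sample_html = """
<nav class="menu">Home | Posts</nav>
<h1 class="post-title">LLM Powered Autonomous Agents</h1>
<div class="post-content"><p>Building agents with <b>LLM</b> as its core controller.</p></div>
<footer>Footer text</footer>
"""

parser = ClassTextExtractor(WANTED)
parser.feed(sample_html)
print(parser.parts)
```

The navigation and footer text is dropped because neither element carries one of the wanted classes.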

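Go deeper

DocumentLoader: Object that loads data from a source as a list of Documents.

Splitting documents

Our loaded document is over 43k characters, which is too long to fit into the context window of many models. Even models that could fit the full post in their context window can struggle to find information in very long inputs.

To handle this we'll split the Document into chunks for embedding and vector storage. This should help us retrieve only the most relevant parts of the blog post at run time.

As in the semantic search tutorial, we use a RecursiveCharacterTextSplitter. It recursively splits the document using common separators like new lines until each chunk is the appropriate size. This is the recommended text splitter for generic text use cases.

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,  # chunk size (characters)
    chunk_overlap=200,  # chunk overlap (characters)
    add_start_index=True,  # track index in original document
)
all_splits = text_splitter.split_documents(docs)

print(f"Split blog post into {len(all_splits)} sub-documents.")
```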

Split blog post into 66 sub-documents.
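The idea behind recursive splitting can be sketched in a few lines. First split on the coarsest separator (blank lines). Fall back to finer ones (newlines, spaces, single characters) only for pieces that are still too long. Then greedily merge neighboring small pieces back up to the size limit. This is a simplified illustration with explicit assumptions: no chunk overlap, no start indices, and separators are lost at chunk boundaries. `recursive_split` is a hypothetical helper, not LangChain's implementation.

```python
def recursive_split(
    text: str,
    chunk_size: int,
    separators: tuple[str, ...] = ("\n\n", "\n", " ", ""),
) -> list[str]:
    """Split on the coarsest separator first; re-split only pieces that are still too long."""
    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard-cut the text every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    chunks: list[str] = []
    buffer: list[str] = []  # small pieces waiting to be merged back together

    def flush() -> None:
        # Greedily re-join buffered pieces with `sep` while the result fits in chunk_size.
        current = ""
        for piece in buffer:
            candidate = piece if not current else current + sep + piece
            if len(candidate) <= chunk_size:
                current = candidate
            else:
                chunks.append(current)
                current = piece
        if current:
            chunks.append(current)
        buffer.clear()

    for piece in text.split(sep):
        if not piece:
            continue
        if len(piece) <= chunk_size:
            buffer.append(piece)
        else:
            flush()  # emit pending pieces first so chunk order follows the text
            chunks.extend(recursive_split(piece, chunk_size, finer))
    flush()
    return chunks


sample_text = "Intro paragraph.\n\nA much longer second paragraph that needs splitting."
for chunk in recursive_split(sample_text, chunk_size=20):
    print(repr(chunk))
```

The short first paragraph survives intact. Only the oversized second paragraph falls through to word-level splitting.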

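Go deeper

TextSplitter: Object that splits a list of Documents into smaller chunks. Subclass of DocumentTransformer.

- Learn more about splitting text using different methods by reading the how-to docs

- Code (py or js)

- Scientific papers

- Interface: API reference for the base interface.

DocumentTransformer: Object that performs a transformation on a list of Document objects.

Storing documents

Now we need to index our 66 text chunks so that we can search over them at run time. Following the semantic search tutorial, our approach is to embed the contents of each document split and insert these embeddings into a vector store. Given an input query, we can then use vector search to retrieve relevant documents.

We can embed and store all of our document splits in a single command, using the vector store and embeddings model selected at the start of the tutorial.

```python
document_ids = vector_store.add_documents(documents=all_splits)

print(document_ids[:3])
```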

['07c18af6-ad58-479a-bfb1-d508033f9c64', '9000bf8e-1993-446f-8d4d-f4e507ba4b8f', 'ba3b5d14-bed9-4f5f-88be-44c88aedc2e6']

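Go deeper

Embeddings: Wrapper around a text embedding model, used for converting text to embeddings.

VectorStore: Wrapper around a vector database, used for storing and querying embeddings.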
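Embedding and vector search can be illustrated without a model. In the sketch below, a toy bag-of-words "embedding" (word counts) stands in for a real embedding model such as the one selected earlier. Stored chunks are ranked by cosine similarity to the query. `toy_embed`, `ToyVectorStore`, and the sample sentences are hypothetical. The method names only echo the shape of `add_documents` and `similarity_search`. Real embeddings capture meaning rather than exact word overlap.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real model returns a dense float vector)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self):
        self.entries: list[tuple[str, Counter]] = []

    def add_documents(self, texts: list[str]) -> list[int]:
        """Embed each text once at indexing time and keep (text, vector) pairs."""
        ids = []
        for text in texts:
            self.entries.append((text, toy_embed(text)))
            ids.append(len(self.entries) - 1)
        return ids

    def similarity_search(self, query: str, k: int = 4) -> list[str]:
        """Embed the query at run time and return the k most similar stored texts."""
        q = toy_embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


toy_store = ToyVectorStore()
toy_store.add_documents([
    "Task decomposition breaks a hard task into smaller steps.",
    "Memory can be short-term or long-term.",
    "Tool use lets an agent call external APIs.",
])
print(toy_store.similarity_search("What is task decomposition?", k=1))
```

The design mirrors the split between indexing and retrieval. Documents are embedded once when added. Only the query is embedded at search time.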

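This completes the Indexing portion of the pipeline. At this point we have a queryable vector store containing the chunked contents of our blog post. Given a user question, we should ideally be able to return the snippets of the blog post that answer the question.

2. Retrieval and Generation

Now let's write the actual application logic. We want to create a simple application that takes a user question, searches for documents relevant to that question, passes the retrieved documents and initial question to a model, and returns an answer.

For generation, we will use the chat model selected at the start of the tutorial.

We'll use a prompt for RAG that is checked into the LangChain prompt hub (here).

```python
from langchain import hub

prompt = hub.pull("rlm/rag-prompt")

example_messages = prompt.invoke(
    {"context": "(context goes here)", "question": "(question goes here)"}
).to_messages()

assert len(example_messages) == 1
print(example_messages[0].content)
```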

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: (question goes here)

Context: (context goes here)

Answer:

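We'll use LangGraph to tie together the retrieval and generation steps into a single application. This brings a number of benefits:

- We can define our application logic once and automatically support multiple invocation modes, including streaming, async, and batched calls.

- We get streamlined deployments via LangGraph Platform.

- LangSmith will automatically trace the steps of our application together.

- We can easily add key features to our application, including persistence and human-in-the-loop approval, with minimal code changes.

To use LangGraph, we need to define three things:

- The state of our application;

- The nodes of our application (i.e., application steps);

- The "control flow" of our application (e.g., the ordering of the steps).

State:

The state of our application controls what data is input to the application, transferred between steps, and output by the application. It is typically a TypedDict, but can also be a Pydantic BaseModel.

For a simple RAG application, we can just keep track of the input question, retrieved context, and generated answer:

```python
from langchain_core.documents import Document
from typing_extensions import List, TypedDict


class State(TypedDict):
    question: str
    context: List[Document]
    answer: str
```

Nodes (application steps)

Let's start with a simple sequence of two steps: retrieval and generation.

```python
def retrieve(state: State):
    retrieved_docs = vector_store.similarity_search(state["question"])
    return {"context": retrieved_docs}


def generate(state: State):
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}
```

Our retrieval step simply runs a similarity search using the input question. The generation step formats the retrieved context and the original question into a prompt for the chat model.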
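Conceptually, each node receives the current state and returns only the keys it wants to change. The returned dict is merged into the state before the next node runs. By default, a returned key simply overwrites the old value. The plain-Python sketch below models that for a two-step sequence. `ToyState`, `run_sequence`, and the stub nodes are hypothetical and use no vector store or model. LangGraph's actual runtime does much more (reducers, checkpoints, streaming).

```python
from typing import Callable, TypedDict


class ToyState(TypedDict, total=False):
    question: str
    context: list[str]
    answer: str


def toy_retrieve(state: ToyState) -> dict:
    # Stub: a real node would call vector_store.similarity_search(state["question"]).
    return {"context": ["Task decomposition splits a task into steps."]}


def toy_generate(state: ToyState) -> dict:
    # Stub: a real node would format the prompt and call the chat model.
    return {"answer": f"Based on {len(state['context'])} document(s): {state['context'][0]}"}


def run_sequence(nodes: list[Callable[[ToyState], dict]], inputs: ToyState) -> ToyState:
    """Run nodes in order, merging each node's partial update into the shared state."""
    state = dict(inputs)
    for node in nodes:
        state.update(node(state))
    return state


toy_result = run_sequence([toy_retrieve, toy_generate], {"question": "What is Task Decomposition?"})
print(toy_result["answer"])
```

Because each node returns only its own key, the final state still holds the original question alongside the context and answer. This is why the real graph's result exposes all three fields.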

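Control flow

Finally, we compile our application into a single graph object. In this case, we are just connecting the retrieval and generation steps into a single sequence.

```python
from langgraph.graph import START, StateGraph

graph_builder = StateGraph(State).add_sequence([retrieve, generate])
graph_builder.add_edge(START, "retrieve")
graph = graph_builder.compile()
```

LangGraph also comes with built-in utilities for visualizing the control flow of your application:

```python
from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))
```

Do I need to use LangGraph?

LangGraph is not required to build a RAG application. Indeed, we can implement the same application logic through invocations of the individual components:

```python
question = "..."

retrieved_docs = vector_store.similarity_search(question)
docs_content = "\n\n".join(doc.page_content for doc in retrieved_docs)
# Store the formatted prompt under a new name so the `prompt` template is not overwritten
messages = prompt.invoke({"question": question, "context": docs_content})
answer = llm.invoke(messages)
```

The benefits of LangGraph include:

- Support for multiple invocation modes: this logic would need to be rewritten if we wanted to stream output tokens, or stream the results of individual steps;

- Automatic support for tracing via LangSmith and deployments via LangGraph Platform;

- Support for persistence, human-in-the-loop, and other features.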
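To see why the plain script above would need rewriting for streaming, compare it with a runner that treats each step as a separate unit. Such a runner can hand back every step's update as soon as that step finishes. The generator below is a hypothetical sketch of the idea behind `stream_mode="updates"`, which yields one `{node_name: update}` dict per step. The node names match the tutorial, but the nodes are stubs and `stream_updates` is not a LangGraph function.

```python
from typing import Callable, Iterator


def toy_retrieve(state: dict) -> dict:
    return {"context": ["doc about task decomposition"]}  # stub for vector search


def toy_generate(state: dict) -> dict:
    return {"answer": f"Answer using {len(state['context'])} doc(s)"}  # stub for the LLM call


def stream_updates(
    nodes: list[tuple[str, Callable[[dict], dict]]], inputs: dict
) -> Iterator[dict]:
    """Yield {node_name: update} as each step finishes, instead of only the final state."""
    state = dict(inputs)
    for name, node in nodes:
        update = node(state)
        state.update(update)
        yield {name: update}


steps = [("retrieve", toy_retrieve), ("generate", toy_generate)]
for step in stream_updates(steps, {"question": "What is Task Decomposition?"}):
    print(step)
```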

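Many use cases demand RAG in a conversational experience, so that a user can receive context-informed answers via a stateful conversation. As we will see in Part 2 of the tutorial, LangGraph's management and persistence of state simplifies these applications enormously.

Usage

Let's test our application! LangGraph supports multiple invocation modes, including sync, async, and streaming.

Invoke:

```python
result = graph.invoke({"question": "What is Task Decomposition?"})

print(f'Context: {result["context"]}\n\n')
print(f'Answer: {result["answer"]}')
```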

Context: [Document(id='a42dc78b-8f76-472a-9e25-180508af74f3', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 1585}, page_content='Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.'), Document(id='c0e45887-d0b0-483d-821a-bb5d8316d51d', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 2192}, page_content='Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.'), Document(id='4cc7f318-35f5-440f-a4a4-145b5f0b918d', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 29630}, page_content='Resources:\n1. Internet access for searches and information gathering.\n2. Long Term memory management.\n3. GPT-3.5 powered Agents for delegation of simple tasks.\n4. File output.\n\nPerformance Evaluation:\n1. 
Continuously review and analyze your actions to ensure you are performing to the best of your abilities.\n2. Constructively self-criticize your big-picture behavior constantly.\n3. Reflect on past decisions and strategies to refine your approach.\n4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.'), Document(id='f621ade4-9b0d-471f-a522-44eb5feeba0c', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 19373}, page_content="(3) Task execution: Expert models execute on the specific tasks and log results.\nInstruction:\n\nWith the input and the inference results, the AI assistant needs to describe the process and results. The previous stages can be formed as - User Input: {{ User Input }}, Task Planning: {{ Tasks }}, Model Selection: {{ Model Assignment }}, Task Execution: {{ Predictions }}. You must first answer the user's request in a straightforward manner. Then describe the task process and show your analysis and model inference results to the user in the first person. If inference results contain a file path, must tell the user the complete file path.")]

Answer: Task decomposition is a technique used to break down complex tasks into smaller, manageable steps, allowing for more efficient problem-solving. This can be achieved through methods like chain of thought prompting or the tree of thoughts approach, which explores multiple reasoning possibilities at each step. It can be initiated through simple prompts, task-specific instructions, or human inputs.

Stream steps:

```python
for step in graph.stream(
    {"question": "What is Task Decomposition?"}, stream_mode="updates"
):
    print(f"{step}\n\n----------------\n")
```

{'retrieve': {'context': [Document(id='a42dc78b-8f76-472a-9e25-180508af74f3', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 1585}, page_content='Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.'), Document(id='c0e45887-d0b0-483d-821a-bb5d8316d51d', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 2192}, page_content='Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.'), Document(id='4cc7f318-35f5-440f-a4a4-145b5f0b918d', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 29630}, page_content='Resources:\n1. Internet access for searches and information gathering.\n2. Long Term memory management.\n3. GPT-3.5 powered Agents for delegation of simple tasks.\n4. File output.\n\nPerformance Evaluation:\n1. 
Continuously review and analyze your actions to ensure you are performing to the best of your abilities.\n2. Constructively self-criticize your big-picture behavior constantly.\n3. Reflect on past decisions and strategies to refine your approach.\n4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.'), Document(id='f621ade4-9b0d-471f-a522-44eb5feeba0c', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 19373}, page_content="(3) Task execution: Expert models execute on the specific tasks and log results.\nInstruction:\n\nWith the input and the inference results, the AI assistant needs to describe the process and results. The previous stages can be formed as - User Input: {{ User Input }}, Task Planning: {{ Tasks }}, Model Selection: {{ Model Assignment }}, Task Execution: {{ Predictions }}. You must first answer the user's request in a straightforward manner. Then describe the task process and show your analysis and model inference results to the user in the first person. If inference results contain a file path, must tell the user the complete file path.")]}}

----------------

{'generate': {'answer': 'Task decomposition is the process of breaking down a complex task into smaller, more manageable steps. This technique, often enhanced by methods like Chain of Thought (CoT) or Tree of Thoughts, allows models to reason through tasks systematically and improves performance by clarifying the thought process. It can be achieved through simple prompts, task-specific instructions, or human inputs.'}}

----------------

Stream tokens:

```python
for message, metadata in graph.stream(
    {"question": "What is Task Decomposition?"}, stream_mode="messages"
):
    print(message.content, end="|")
```

|Task| decomposition| is| the| process| of| breaking| down| complex| tasks| into| smaller|,| more| manageable| steps|.| It| can| be| achieved| through| techniques| like| Chain| of| Thought| (|Co|T|)| prompting|,| which| encourages| the| model| to| think| step| by| step|,| or| through| more| structured| methods| like| the| Tree| of| Thoughts|.| This| approach| not| only| simplifies| task| execution| but| also| provides| insights| into| the| model|'s| reasoning| process|.||

For async invocations, use:

```python
result = await graph.ainvoke(...)
```

and

```python
async for step in graph.astream(...):
```
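`await` and `async for` only work inside a running event loop. Notebooks provide one, which is why the lines above can be used there at top level. In a plain script, one option is `asyncio.run`. The sketch below is hypothetical. `toy_ainvoke` stands in for `graph.ainvoke` with stub async steps. It shows two independent questions being awaited concurrently with `asyncio.gather`.

```python
import asyncio


async def toy_retrieve(state: dict) -> dict:
    await asyncio.sleep(0.01)  # stands in for an async vector-store lookup
    return {"context": [f"doc for {state['question']}"]}


async def toy_generate(state: dict) -> dict:
    await asyncio.sleep(0.01)  # stands in for an async chat-model call
    return {"answer": f"answer to {state['question']}"}


async def toy_ainvoke(inputs: dict) -> dict:
    """Hypothetical async runner: await each step in order and merge its update."""
    state = dict(inputs)
    for node in (toy_retrieve, toy_generate):
        state.update(await node(state))
    return state


async def main() -> list[dict]:
    questions = ["What is Task Decomposition?", "What is memory?"]
    # Independent invocations can be awaited concurrently.
    return await asyncio.gather(*(toy_ainvoke({"question": q}) for q in questions))


results = asyncio.run(main())  # in a notebook, use `await main()` instead
for r in results:
    print(r["answer"])
```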

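Returning sources

Note that by storing the retrieved context in the state of the graph, we can recover the sources for the model's generated answer from the "context" field of the state. See this guide on returning sources for more detail.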
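Because the retrieved documents stay in the `"context"` field, listing sources is plain post-processing. The sketch below uses a simplified stand-in `Doc` dataclass with the same `page_content` / `metadata` fields as LangChain's `Document`. The `format_sources` helper is hypothetical. It groups chunks by `source` URL and keeps their `start_index` values (taken here from the output shown earlier). Since the helper only reads `.metadata`, it should also accept the real `Document` objects in `result["context"]`.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Simplified stand-in for langchain_core.documents.Document (same two fields)."""

    page_content: str
    metadata: dict = field(default_factory=dict)


def format_sources(context: list[Doc]) -> dict[str, list[int]]:
    """Group retrieved chunks by their 'source' metadata, keeping start offsets in order."""
    sources: dict[str, list[int]] = {}
    for doc in context:
        source = doc.metadata.get("source", "unknown")
        sources.setdefault(source, []).append(doc.metadata.get("start_index", -1))
    return sources


post_url = "https://lilianweng.github.io/posts/2023-06-23-agent/"
example_context = [
    Doc("Fig. 1. Overview of a LLM-powered autonomous agent system.", {"source": post_url, "start_index": 1585}),
    Doc("Tree of Thoughts (Yao et al. 2023) extends CoT.", {"source": post_url, "start_index": 2192}),
]
print(format_sources(example_context))
```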

Go deeper

Chat models take in a sequence of messages and return a message.

Customizing the prompt

As shown above, we can load prompts (e.g., this RAG prompt) from the prompt hub. The prompt can also be easily customized. For example:

```python
from langchain_core.prompts import PromptTemplate

template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""
custom_rag_prompt = PromptTemplate.from_template(template)
```
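`PromptTemplate.from_template` treats `{context}` and `{question}` as the template's input variables. The sketch below roughly illustrates what filling in the template produces. It applies Python's built-in `string.Formatter` and `str.format` to the same template text. Retrieved chunks are joined with blank lines, the way the `generate` step does. The chunk texts are made up, and this is not how `PromptTemplate` is implemented internally.

```python
from string import Formatter

template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""

# Placeholder names found in the template (the variables a caller must supply).
variables = {name for _, name, _, _ in Formatter().parse(template) if name}
print(sorted(variables))

# Join retrieved chunks with blank lines, as the generate step does, then fill the template.
example_chunks = ["Task decomposition splits a task into steps.", "CoT asks the model to think step by step."]
filled = template.format(context="\n\n".join(example_chunks), question="What is Task Decomposition?")
print(filled)
```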

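Query analysis

So far, we are executing the retrieval using the raw input query. However, there are some advantages to allowing a model to generate the query for retrieval purposes. For example:

- In addition to semantic search, we can build in structured filters (e.g., "Find documents since the year 2020.");

- The model can rewrite user queries, which may be multifaceted or include irrelevant language, into more effective search queries.

Query analysis employs models to transform or construct optimized search queries from raw user input. We can easily incorporate a query analysis step into our application. For illustrative purposes, let's add some metadata to the documents in our vector store. We will add some (contrived) sections to the documents, which we can filter on later.

```python
total_documents = len(all_splits)
third = total_documents // 3

for i, document in enumerate(all_splits):
    if i < third:
        document.metadata["section"] = "beginning"
    elif i < 2 * third:
        document.metadata["section"] = "middle"
    else:
        document.metadata["section"] = "end"


all_splits[0].metadata
```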

{'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/',

'start_index': 8,

'section': 'beginning'}
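Because `third` uses integer division, any remainder ends up in the `"end"` section. The same rule is factored below into a hypothetical `assign_sections` helper and checked for 66 splits (the count printed earlier) and for 67.

```python
from collections import Counter


def assign_sections(total_documents: int) -> list[str]:
    """Same rule as the loop above: first third 'beginning', second third 'middle', rest 'end'."""
    third = total_documents // 3
    sections = []
    for i in range(total_documents):
        if i < third:
            sections.append("beginning")
        elif i < 2 * third:
            sections.append("middle")
        else:
            sections.append("end")
    return sections


print(Counter(assign_sections(66)))
print(Counter(assign_sections(67)))
```

With 66 splits, each section gets 22. With 67, the extra split goes to "end".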

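We need to update the documents in our vector store. We will use a simple InMemoryVectorStore for this, as we will use some of its specific features (i.e., metadata filtering). Refer to the vector store integration documentation for the relevant features of your chosen vector store.

```python
from langchain_core.vectorstores import InMemoryVectorStore

vector_store = InMemoryVectorStore(embeddings)
_ = vector_store.add_documents(all_splits)
```

Next let's define a schema for our search query. We will use structured output for this purpose. Here we define a query as containing a string query and a document section (either "beginning", "middle", or "end"), but this can be defined however you like.

```python
from typing import Literal

from typing_extensions import Annotated, TypedDict


class Search(TypedDict):
    """Search query."""

    query: Annotated[str, ..., "Search query to run."]
    section: Annotated[
        Literal["beginning", "middle", "end"],
        ...,
        "Section to query.",
    ]
```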
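The `Literal` annotation is what limits `section` to three values. `with_structured_output` takes care of parsing the model's reply. Purely to illustrate what the schema constrains, the hypothetical `validate_search` helper below reads the allowed values back out of the annotation with `typing.get_type_hints` and `typing.get_args`. It then checks a candidate dict such as `{"query": "Task Decomposition", "section": "end"}`. It uses a copy named `SearchSketch`, built on `typing` rather than `typing_extensions`, so it does not replace the tutorial's `Search`.

```python
from typing import Annotated, Literal, TypedDict, get_args, get_type_hints


class SearchSketch(TypedDict):
    """Search query (copy of the tutorial's Search schema)."""

    query: Annotated[str, ..., "Search query to run."]
    section: Annotated[Literal["beginning", "middle", "end"], ..., "Section to query."]


def validate_search(candidate: dict) -> dict:
    """Raise if candidate lacks a schema key or uses a section outside the Literal."""
    hints = get_type_hints(SearchSketch, include_extras=True)
    missing = hints.keys() - candidate.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if not isinstance(candidate["query"], str):
        raise TypeError("query must be a string")
    # get_args(Annotated[X, ...]) -> (X, ..., description); the Literal is X.
    allowed_sections = get_args(get_args(hints["section"])[0])
    if candidate["section"] not in allowed_sections:
        raise ValueError(f"section must be one of {allowed_sections}")
    return candidate


print(validate_search({"query": "Task Decomposition", "section": "end"}))
```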

Finally, we add a step to our LangGraph application to generate a query from the user's raw input:

```python
class State(TypedDict):
    question: str
    query: Search
    context: List[Document]
    answer: str


def analyze_query(state: State):
    structured_llm = llm.with_structured_output(Search)
    query = structured_llm.invoke(state["question"])
    return {"query": query}


def retrieve(state: State):
    query = state["query"]
    retrieved_docs = vector_store.similarity_search(
        query["query"],
        filter=lambda doc: doc.metadata.get("section") == query["section"],
    )
    return {"context": retrieved_docs}


def generate(state: State):
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}


graph_builder = StateGraph(State).add_sequence([analyze_query, retrieve, generate])
graph_builder.add_edge(START, "analyze_query")
graph = graph_builder.compile()
```
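In `InMemoryVectorStore`, `filter` is a Python callable that receives each stored `Document` and returns whether to keep it. Many other vector stores instead accept a metadata dict or their own filter syntax, so check your integration's docs. The self-contained sketch below shows, conceptually, what the lambda achieves: discard documents from other sections, then rank what remains. `overlap_score` (shared words) is a hypothetical stand-in for embedding similarity, and plain dicts stand in for `Document` objects.

```python
def overlap_score(query: str, text: str) -> int:
    """Toy relevance score: number of shared lowercase words (stands in for vector similarity)."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def filtered_search(docs: list[dict], query: str, section: str, k: int = 4) -> list[dict]:
    """Keep only docs from the requested section, then rank the survivors by score."""
    candidates = [d for d in docs if d["metadata"].get("section") == section]
    return sorted(candidates, key=lambda d: overlap_score(query, d["text"]), reverse=True)[:k]


toy_docs = [
    {"text": "task decomposition with chain of thought", "metadata": {"section": "beginning"}},
    {"text": "challenges in long-term planning and task decomposition", "metadata": {"section": "end"}},
    {"text": "finite context length", "metadata": {"section": "end"}},
]
hits = filtered_search(toy_docs, "task decomposition", section="end", k=1)
print(hits[0]["text"])
```

The first document matches the query just as well but never competes, because the section filter removes it before ranking.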

Full code:

```python
# Assumes `llm` and `embeddings` were created in the Components section above.
from typing import Literal

import bs4
from IPython.display import Image, display
from langchain import hub
from langchain_community.document_loaders import WebBaseLoader
from langchain_core.documents import Document
from langchain_core.vectorstores import InMemoryVectorStore
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langgraph.graph import START, StateGraph
from typing_extensions import Annotated, List, TypedDict

# Load and chunk contents of the blog
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
all_splits = text_splitter.split_documents(docs)

# Update metadata (illustration purposes)
total_documents = len(all_splits)
third = total_documents // 3

for i, document in enumerate(all_splits):
    if i < third:
        document.metadata["section"] = "beginning"
    elif i < 2 * third:
        document.metadata["section"] = "middle"
    else:
        document.metadata["section"] = "end"


# Index chunks
vector_store = InMemoryVectorStore(embeddings)
_ = vector_store.add_documents(all_splits)


# Define schema for search
class Search(TypedDict):
    """Search query."""

    query: Annotated[str, ..., "Search query to run."]
    section: Annotated[
        Literal["beginning", "middle", "end"],
        ...,
        "Section to query.",
    ]


# Define prompt for question-answering
prompt = hub.pull("rlm/rag-prompt")


# Define state for application
class State(TypedDict):
    question: str
    query: Search
    context: List[Document]
    answer: str


def analyze_query(state: State):
    structured_llm = llm.with_structured_output(Search)
    query = structured_llm.invoke(state["question"])
    return {"query": query}


def retrieve(state: State):
    query = state["query"]
    retrieved_docs = vector_store.similarity_search(
        query["query"],
        filter=lambda doc: doc.metadata.get("section") == query["section"],
    )
    return {"context": retrieved_docs}


def generate(state: State):
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}


graph_builder = StateGraph(State).add_sequence([analyze_query, retrieve, generate])
graph_builder.add_edge(START, "analyze_query")
graph = graph_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))
```

We can test our implementation by specifically asking for context from the end of the post. Note that the model includes different information in its answer.

```python
for step in graph.stream(
    {"question": "What does the end of the post say about Task Decomposition?"},
    stream_mode="updates",
):
    print(f"{step}\n\n----------------\n")
```

{'analyze_query': {'query': {'query': 'Task Decomposition', 'section': 'end'}}}

----------------

{'retrieve': {'context': [Document(id='d6cef137-e1e8-4ddc-91dc-b62bd33c6020', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 39221, 'section': 'end'}, page_content='Finite context length: The restricted context capacity limits the inclusion of historical information, detailed instructions, API call context, and responses. The design of the system has to work with this limited communication bandwidth, while mechanisms like self-reflection to learn from past mistakes would benefit a lot from long or infinite context windows. Although vector stores and retrieval can provide access to a larger knowledge pool, their representation power is not as powerful as full attention.\n\n\nChallenges in long-term planning and task decomposition: Planning over a lengthy history and effectively exploring the solution space remain challenging. LLMs struggle to adjust plans when faced with unexpected errors, making them less robust compared to humans who learn from trial and error.'), Document(id='d1834ae1-eb6a-43d7-a023-08dfa5028799', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 39086, 'section': 'end'}, page_content='}\n]\nChallenges#\nAfter going through key ideas and demos of building LLM-centered agents, I start to see a couple common limitations:'), Document(id='ca7f06e4-2c2e-4788-9a81-2418d82213d9', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 32942, 'section': 'end'}, page_content='}\n]\nThen after these clarification, the agent moved into the code writing mode with a different system message.\nSystem message:'), Document(id='1fcc2736-30f4-4ef6-90f2-c64af92118cb', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'start_index': 35127, 'section': 'end'}, page_content='"content": "You will get instructions for code to write.\\nYou will write a very long answer. 
Make sure that every detail of the architecture is, in the end, implemented as code.\\nMake sure that every detail of the architecture is, in the end, implemented as code.\\n\\nThink step by step and reason yourself to the right decisions to make sure we get it right.\\nYou will first lay out the names of the core classes, functions, methods that will be necessary, as well as a quick comment on their purpose.\\n\\nThen you will output the content of each file including ALL code.\\nEach file must strictly follow a markdown code block format, where the following tokens must be replaced such that\\nFILENAME is the lowercase file name including the file extension,\\nLANG is the markup code block language for the code\'s language, and CODE is the code:\\n\\nFILENAME\\n\`\`\`LANG\\nCODE\\n\`\`\`\\n\\nYou will start with the \\"entrypoint\\" file, then go to the ones that are imported by that file, and so on.\\nPlease')]}}

----------------

{'generate': {'answer': 'The end of the post highlights that task decomposition faces challenges in long-term planning and adapting to unexpected errors. LLMs struggle with adjusting their plans, making them less robust compared to humans who learn from trial and error. This indicates a limitation in effectively exploring the solution space and handling complex tasks.'}}

----------------

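In both the streamed steps and the LangSmith trace, we can now observe the structured query that was fed into the retrieval step.

Query analysis is a rich problem with a wide range of approaches. Refer to the how-to guides for more examples.

Next steps

We've covered the steps to build a basic Q&A app over data:

- Loading data with a Document Loader

- Chunking the indexed data with a Text Splitter to make it more easily usable by a model

- Embedding the data and storing the data in a vector store

- Retrieving the previously stored chunks in response to incoming questions

- Generating an answer using the retrieved chunks as context.

In Part 2 of the tutorial, we will extend the implementation here to accommodate conversation-style interactions and multi-step retrieval processes.

Further reading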